Selected publications

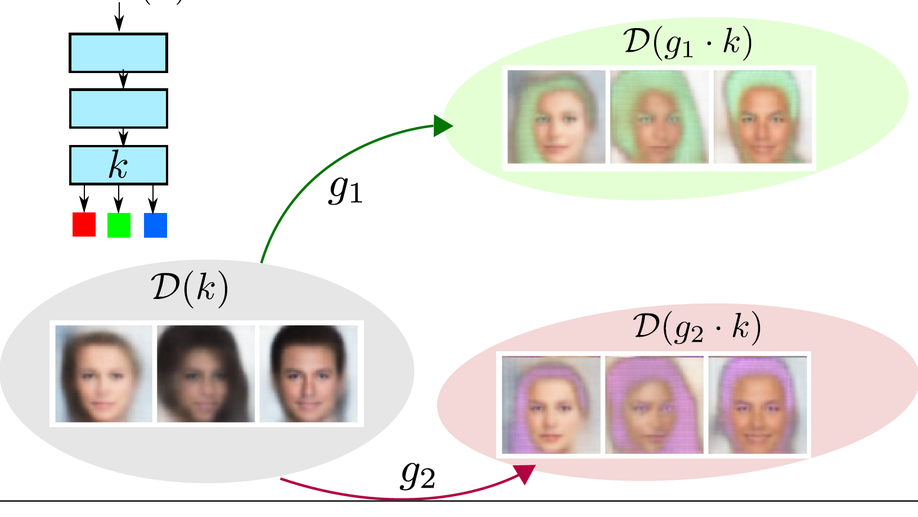

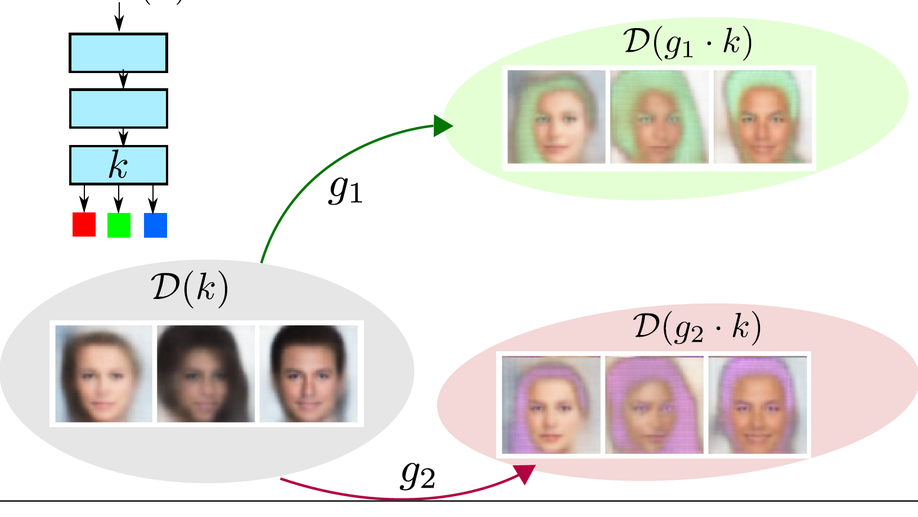

A theory of independent mechanisms for extrapolation in generative models

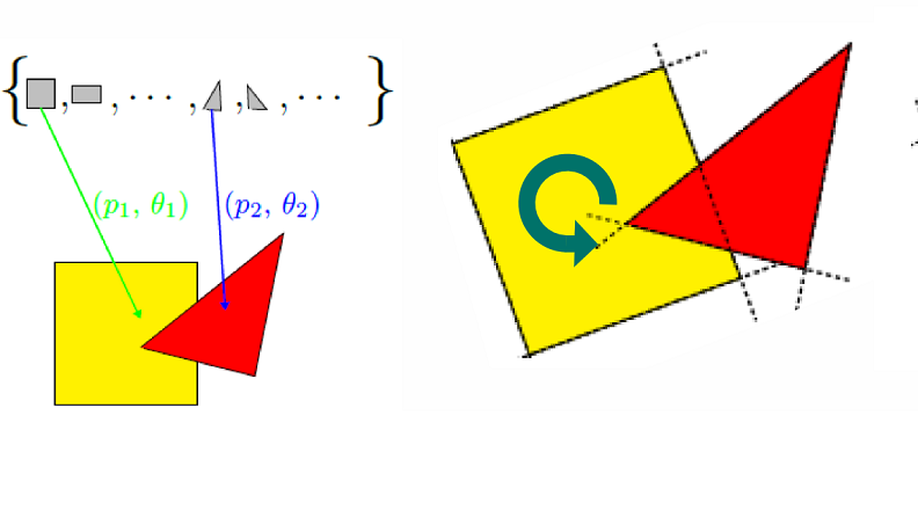

Group invariance principles for causal generative models

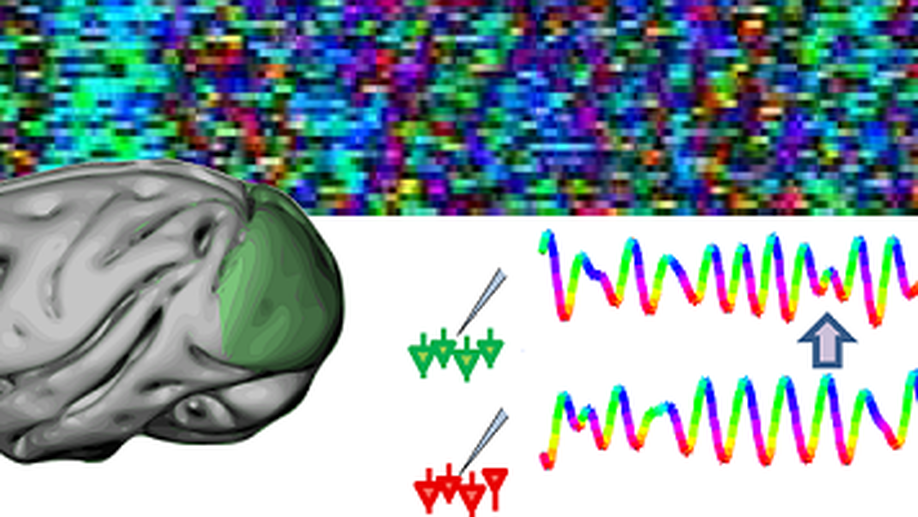

Biological systems demonstrate that highly complex structures can self-organize to adapt efficiently to changes in their environment. We hypothesize this relies on crucial regulatory mechanisms that maintain a form of homeostasis: an optimal internal balance between competing subsystems and functions. We use modeling, machine learning and theory to uncover these mechanisms in the mammalian brain, artificial neural networks and multi-agent systems.

Understanding the efficiency of autonomous systems

The core abilities of autonomous systems rely on multiple interacting subsystems, contributing to common goals with only partially available information. The complexity of such systems, both high-dimensional and non-linear, is a major obstacle to understanding their organization and function. We use computational modeling, machine learning, statistics and causality to address this challenge.

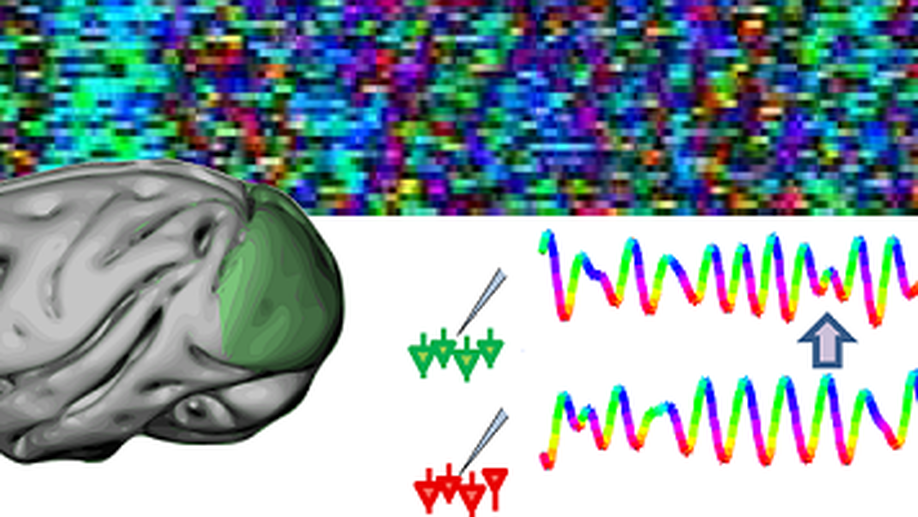

In the mammalian brain, circuits are characterized by strong recurrent and bidirectional connectivity, leading to complex dynamics in which modules cooperate at different levels to give rise, for example, to coherent behaviors and percepts. This complexity manifests itself as collective oscillations and as more complex dynamical patterns, such as sharp wave-ripple complexes, that can be observed in electrical brain activity. These neural events are believed to play a key role in information processing, learning and behavior. Our research aims to design better techniques for detecting these events and to understand their underlying mechanisms and computational role.

To address these questions, we emphasize the development of new machine learning tools with strong theoretical foundations that are particularly well suited to capturing the complexity of biological signals. These include unsupervised learning algorithms to identify relevant patterns in large neural recording datasets, non-parametric statistical tools (based on kernel methods) to identify the complex statistical dependencies of biological signals, and causal inference methods to infer the underlying mechanisms generating the data. Importantly, our work also leads us to use models to investigate the principles underlying learning and plasticity in biological and artificial networks.

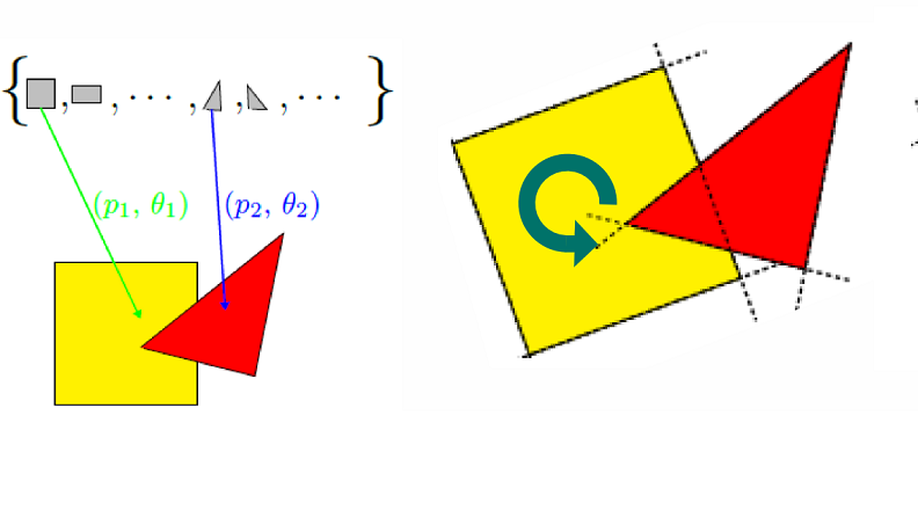

In parallel, the intensive ongoing development of artificial deep neural networks has led to impressive successes, but the functioning of these architectures remains largely elusive because of an aspect they share with biological networks: their high-dimensional connectivity. This gives us an opportunity to use our network analysis tools to uncover fundamental principles for such systems, and possibly relate them to biology. We are currently investigating causality and invariance principles to understand the structure of deep generative models and, in particular, to assess their modularity.

From univariate to multivariate coupling between continuous signals and point processes: a mathematical framework, S. Safavi, N. K. Logothetis and M. Besserve. ArXiv preprint.

Dissecting the synapse- and frequency-dependent network mechanisms of in vivo hippocampal sharp wave-ripples, J. F. Ramirez-Villegas, K. F. Willeke, N. K. Logothetis and M. Besserve. Neuron 2018; 100:1016-19.

Diversity of sharp wave-ripple LFP signatures reveals differentiated brain-wide dynamical events, J. F. Ramirez-Villegas, N. K. Logothetis, M. Besserve. Proceedings of the National Academy of Sciences U.S.A 2015; 112:E6379-E6387.

Shifts of Gamma Phase across Primary Visual Cortical Sites Reflect Dynamic Stimulus-Modulated Information Transfer, M. Besserve, S. C. Lowe, N. K. Logothetis, B. Schölkopf, S. Panzeri. PLOS Biology 2015; 13, e1002257.

Towards a learning-theoretic analysis of spike-timing dependent plasticity, D. Balduzzi and M. Besserve, NIPS 2012.

A theory of independent mechanisms for extrapolation in generative models, M. Besserve, R. Sun, D. Janzing and B. Schölkopf, ArXiv preprint.

Counterfactuals uncover the modular structure of deep generative models, M. Besserve, A. Mehrjou, R. Sun and B. Schölkopf, ICLR 2020.

Coordination via predictive assistants: time series algorithms and game-theoretic analysis, P. Geiger, M. Besserve, J. Winkelmann, C. Proissl and B. Schölkopf. UAI 2019.

Group invariance principles for causal generative models, M. Besserve, N. Shajarisales, B. Schölkopf and D. Janzing, Proceedings of the 21st International Conference on Artificial Intelligence and Statistics (AISTATS) 2018.

A theory of independent mechanisms for extrapolation in generative models, M. Besserve, R. Sun, D. Janzing and B. Schölkopf. ArXiv preprint.

From univariate to multivariate coupling between continuous signals and point processes: a mathematical framework, S. Safavi, N. K. Logothetis and M. Besserve. ArXiv preprint.

A central limit like theorem for Fourier sums, D. Janzing, N. Shajarisales and M. Besserve. ArXiv preprint.

Dissecting the synapse- and frequency-dependent network mechanisms of in vivo hippocampal sharp wave-ripples, J. F. Ramirez-Villegas, K. F. Willeke, N. K. Logothetis and M. Besserve. Neuron 2018; 100:1016-19.

Parallel and functionally segregated processing of task phase and conscious content in the prefrontal cortex, V. Kapoor, M. Besserve, N.K. Logothetis and F. Panagiotaropoulos. Communications Biology 2018; 1.

Diversity of sharp wave-ripple LFP signatures reveals differentiated brain-wide dynamical events, J. F. Ramirez-Villegas, N. K. Logothetis, M. Besserve. Proceedings of the National Academy of Sciences U.S.A 2015; 112:E6379-E6387.

Shifts of Gamma Phase across Primary Visual Cortical Sites Reflect Dynamic Stimulus-Modulated Information Transfer, M. Besserve, S. C. Lowe, N. K. Logothetis, B. Schölkopf, S. Panzeri. PLOS Biology 2015; 13, e1002257.

Metabolic cost as an organizing principle for cooperative learning, D. Balduzzi, P.A. Ortega and M. Besserve. Advances in Complex Systems 2013; 16:1350012.

Multimodal information improves the rapid detection of mental fatigue, F. Laurent, M. Valderrama, M. Besserve, M. Guillard, J.-P. Lachaux, J. Martinerie, G. Florence. Biomedical Signal Processing and Control 2013; 8:400-8.

Hippocampal-Cortical Interaction during Periods of Subcortical Silence, N. K. Logothetis, O. Eschenko, Y. Murayama, M. Augath, T. Steudel, H. C. Evrard, M. Besserve & A. Oeltermann. Nature 2012; 491:547-53.

Extraction of functional information from ongoing brain electrical activity, M. Besserve & J. Martinerie. IRBM 2011; 32:27-34.

Dynamics of excitable neural networks with heterogeneous connectivity, M. Chavez, M. Besserve & M. Le Van Quyen. Progress in Biophysics and Molecular Biology 2011; 105:29-33.

Improving quantification of functional networks with EEG inverse problem: evidence from a decoding point of view, M. Besserve, J. Martinerie & L. Garnero, Neuroimage 2011; 55:1536-1547.

Causal relationships between frequency bands of extracellular signals in visual cortex revealed by an information theoretic analysis, M. Besserve, B. Schölkopf, N. K. Logothetis & S. Panzeri. Journal of Computational Neuroscience 2010; 29:547-566.

Source reconstruction and synchrony measurements for revealing functional brain networks and classifying mental states, F. Laurent, M. Besserve, L. Garnero, M. Philippe, G. Florence & J. Martinerie, International Journal of Bifurcation and Chaos 2010; 20:1703-1721.

Classification of ongoing MEEG signals, M. Besserve, K. Jerbi, F. Laurent, S. Baillet, J. Martinerie & L. Garnero. Biological Research 2007; 40 :415-437.

Prediction of performance level during a cognitive task from ongoing EEG oscillatory activities, M. Besserve, M. Philippe, G. Florence, L. Garnero & J. Martinerie, Clinical Neurophysiology 2008; 119:897-908.

Towards a proper estimation of phase synchronization from time series, M. Chavez, M. Besserve, C. Adam & J. Martinerie, Journal of Neuroscience Methods 2006; 154:149-160.

Counterfactuals uncover the modular structure of deep generative models, M. Besserve, A. Mehrjou, R. Sun and B. Schölkopf. ICLR 2020.

Coordination via predictive assistants: time series algorithms and game-theoretic analysis, P. Geiger, M. Besserve, J. Winkelmann, C. Proissl and B. Schölkopf. UAI 2019.

Intrinsic disentanglement: an invariance view for deep generative models, M. Besserve, R. Sun and B. Schölkopf, Workshop on Theoretical Foundations and Applications of Deep Generative Models at ICML 2018.

Group invariance principles for causal generative models, M. Besserve, N. Shajarisales, B. Schölkopf and D. Janzing, Proceedings of the 21st International Conference on Artificial Intelligence and Statistics (AISTATS) 2018.

Telling cause from effect in deterministic linear dynamical systems, N. Shajarisales, D. Janzing, B. Schölkopf and M. Besserve, ICML 2015.

Statistical analysis of coupled time series in the space of Kernel Cross-Spectral Density operators, M. Besserve, N.K. Logothetis and B. Schölkopf, NIPS 2013.

Towards a learning-theoretic analysis of spike-timing dependent plasticity, D. Balduzzi and M. Besserve, NIPS 2012.

Finding dependencies between frequencies with the kernel cross-spectral density, M. Besserve, D. Janzing, N. K. Logothetis & B. Schölkopf, International Conference on Acoustics, Speech and Signal Processing 2011.

Reconstructing the cortical functional network during imagery tasks for boosting asynchronous BCI, M. Besserve, J. Martinerie & L. Garnero, Second French Conference on Computational Neuroscience, “Neurocomp08” 2008.

Non-invasive classification of cortical activities for Brain Computer Interface: A variable selection approach, M. Besserve, J. Martinerie & L. Garnero, 5th IEEE International Symposium on Biomedical Imaging (ISBI) 2008.

De l’estimation à la classification des activités corticales pour les Interfaces Cerveau-Machine, M. Besserve, L. Garnero & J. Martinerie, 21ème colloque GRETSI sur le traitement du signal et des images 2007.

Cross-spectral discriminant analysis for the classification of Brain Computer Interfaces, M. Besserve, L. Garnero & J. Martinerie, 3rd International IEEE EMBS Conference on Neural Engineering 2007.

Prediction of cognitive states using MEG and Blind Source Separation, M. Besserve, K. Jerbi, L. Garnero & J. Martinerie, Proceedings of the 15th International Conference on Biomagnetism, Vancouver, BC Canada, International Congress Series 2007; 1300.

Analyse de la dynamique neuronale pour les Interfaces Cerveau-Machine : un retour aux sources, M. Besserve, PhD dissertation (in French). Université Paris-Sud 11, 22 November 2007.