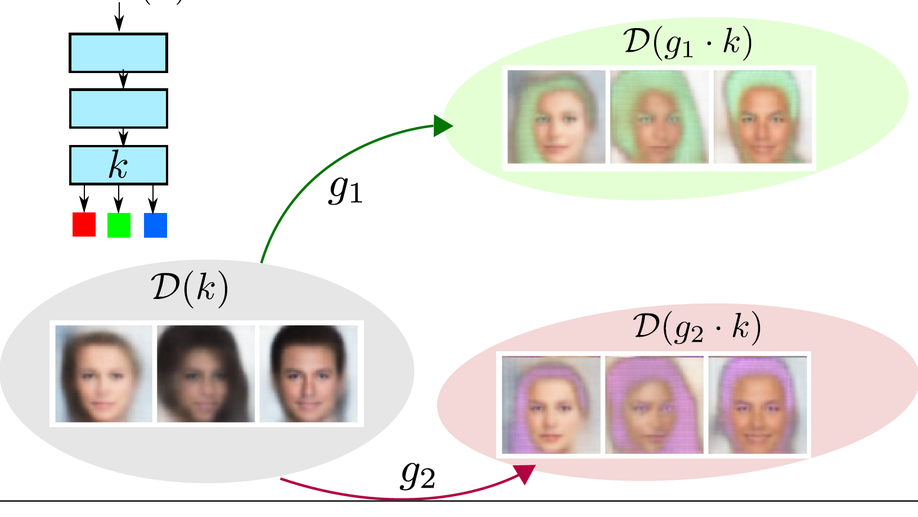

A theory of independent mechanisms for extrapolation in generative models

Generative models can be trained to emulate complex empirical data, but are they useful for making predictions in previously unobserved environments? An intuitive idea to promote such extrapolation capabilities is to have the architecture of such a model reflect a causal graph of the true data generating process, so that one can intervene on each node of this graph independently of the others. However, the nodes of this graph are usually unobserved, leading to a lack of identifiability of the causal structure. We develop a theoretical framework to address this challenging situation by defining a weaker form of identifiability, based on the principle of independence of mechanisms.

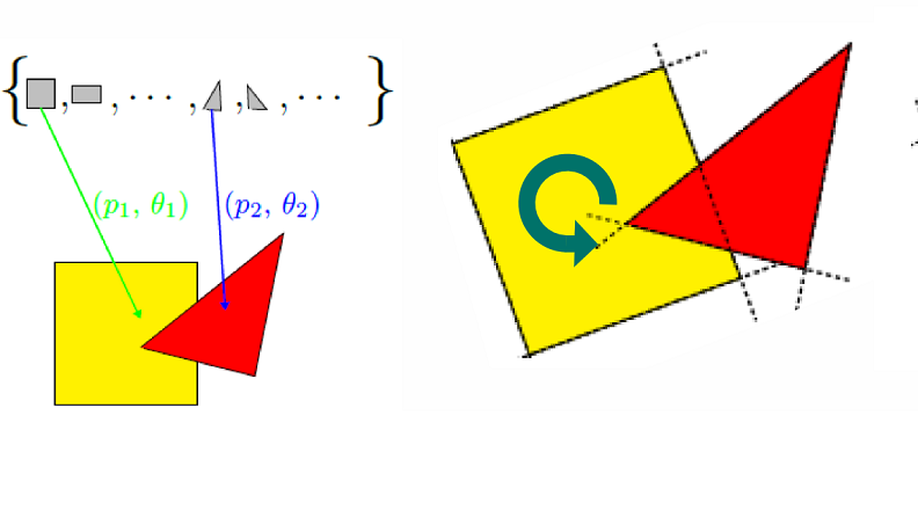

Group invariance principles for causal generative models

The postulate of independence of cause and mechanism (ICM) has recently led to several new causal discovery algorithms. The interpretation of independence and the way it is utilized, however, varies across these methods. Our aim in this paper is to propose a group theoretic framework for ICM to unify and generalize these approaches. In our setting, the cause-mechanism relationship is assessed by perturbing it with random group transformations. We show that the group theoretic view encompasses previous ICM approaches and provides a very general tool to study the structure of data generating mechanisms with direct applications to machine learning.

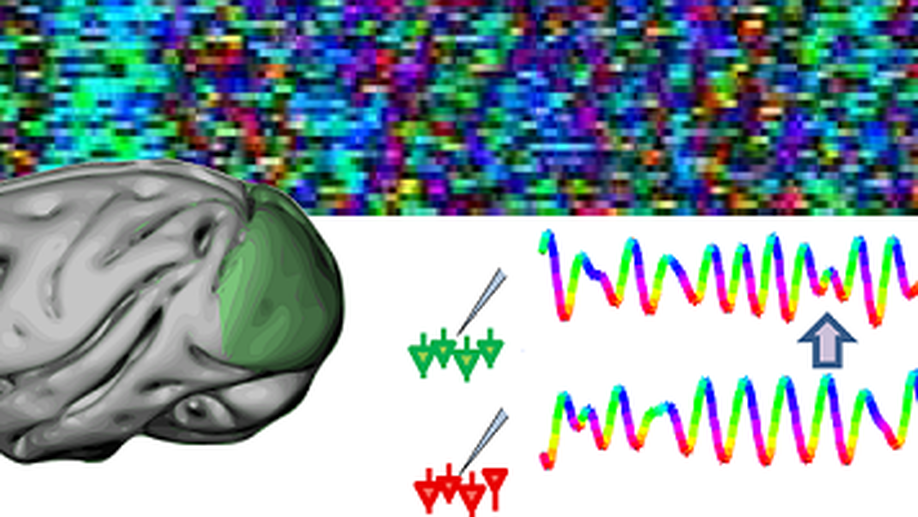

Shifts of Gamma Phase Reflect Dynamic Information Transfer

We investigate how oscillations of cortical activity in the gamma frequency range (50–80 Hz) may dynamically influence the direction and strength of information flow across different groups of neurons. We found that the arrangement of the phase of gamma oscillations at different locations indicated the presence of waves propagating along the cortical tissue, and that these waves propagated along the direction with the maximal flow of information transmitted between neural populations. Our findings suggest that the propagation of gamma oscillations may dynamically reconfigure the directional flow of cortical information during sensory processing.